I evaluate LLMs based on pragmatic metrics. Will it make my life better? Will it improve my productivity? Here’s what you need to know about Meta’s new Llama 3:

- Meta released two Llama 3 LLMs on April 19, 2024, with 8 billion and 70 billion parameters, trained on 15 trillion tokens of publicly available data.

- Where to try it: You can experiment with these models on platforms like Groq.com. They are also available in WhatsApp, Messenger, and Instagram for select users (US only).

- The Llama 3 8B model offers mediocre performance, best suited for offline use with limited hardware.

- The 70B model shows improved capabilities but falls short of paid competitors like GPT-4. However, it is the best free model, a bit ahead of the free Claude 3 Sonnet.

- A more advanced Llama 3 model with 400 billion parameters, aiming to surpass the performance of GPT-4, should be out in the summer.

On April 19, 2024, Meta released Llama 3 language models in two sizes: 8 billion and 70 billion parameters. These models have been trained on a corpus of 15 trillion tokens sourced from publicly available data. The instruction-tuned models have undergone further fine-tuning using both public instruction datasets and a collection of over 10 million human-annotated examples, to improve their ability to understand instructions and respond to them effectively.

Where can you use the new Llama 3?

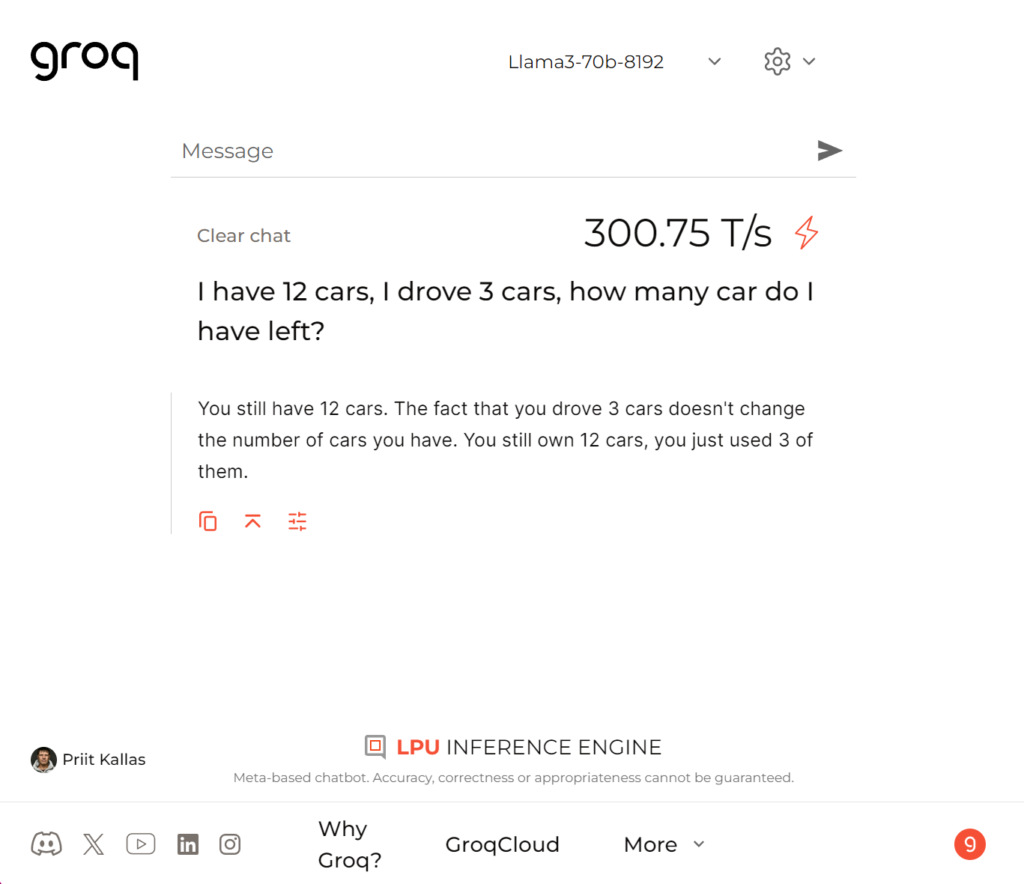

Probably the easiest way to experiment with both of the new Llama models is on https://groq.com/.

Groq is also really fast at running Llama 3.


Meta options

There’s also a new website, Meta.ai.

The new models are also available in WhatsApp, Messenger, and Instagram for select users.

However, outside the USA, these Meta options may not be available yet.

Run Llama 3 offline at home

If you happen to have a powerful computer with a decent GPU, you can run Llama 3 locally. I have a mid-range gaming rig, and it runs the Llama 3 8B model quite nicely.

How do you use Llama 3 at home? I use Ollama to run the Llama 3 8B and 70B models. I have a separate Llama 3 tutorial on how to set it up.

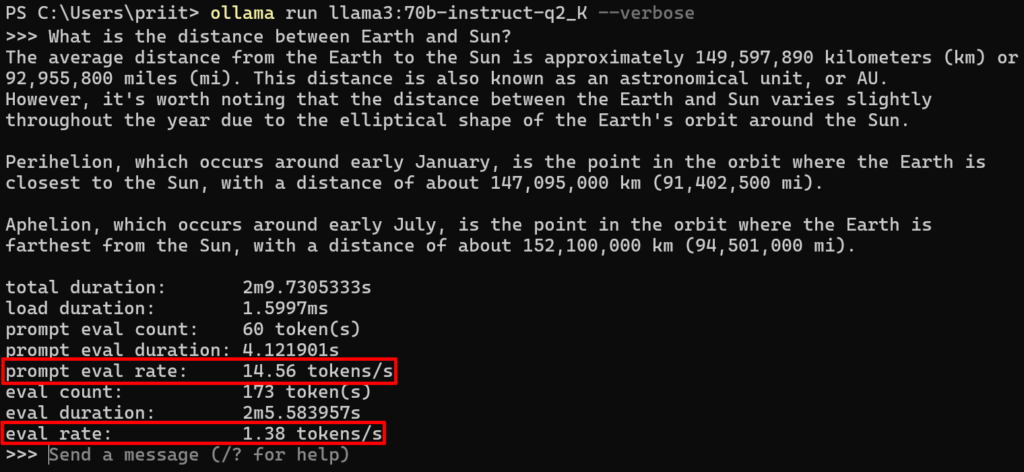

However, the larger 70B model is a bit on the slow side. With more RAM, a better processor, and a high-end graphics card, you can run Llama 3 70B reasonably fast.

My mid-range PC with an NVIDIA RTX 4060 (8 GB of video memory) runs a 2-bit quantized Llama 3 70B at 1.3 to 1.5 tokens per second. Very slow, but not completely unusable:

```shell
ollama run llama3:70b-instruct-q2_K
```
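If you want to measure the tokens per second yourself rather than eyeballing it, Ollama also exposes a local HTTP API (by default at `http://localhost:11434`). The sketch below assumes an Ollama server is running and the `llama3:70b-instruct-q2_K` model has already been pulled. It sends one non-streaming request to `/api/generate` and computes throughput from the `eval_count` (generated tokens) and `eval_duration` (nanoseconds) fields of the response. The helper names `generate` and `tokens_per_second` are my own, hypothetical names, so check the field names against the API docs for your Ollama version.

```python
import json
import urllib.request

# Default local Ollama endpoint (assumption: server running on the default port).
OLLAMA_URL = "http://localhost:11434/api/generate"


def tokens_per_second(response: dict) -> float:
    """Generation speed from an Ollama /api/generate response.

    eval_count is the number of generated tokens; eval_duration is in nanoseconds.
    """
    return response["eval_count"] / (response["eval_duration"] / 1e9)


def generate(prompt: str, model: str = "llama3:70b-instruct-q2_K") -> dict:
    """Send one non-streaming generation request to a local Ollama server (hypothetical helper)."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_URL, data=payload, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as resp:
        return json.loads(resp.read())


# Example usage (requires a running Ollama server, so it is left commented out):
# result = generate("Explain in two sentences what a context window is.")
# print(result["response"])
# print(f"{tokens_per_second(result):.2f} tokens/s")
```

To put my numbers in perspective: at 1.3 to 1.5 tokens per second, a 500-token answer takes roughly 333 to 385 seconds, so about five and a half to six and a half minutes.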

When the most powerful Llama 3 is released sometime in the summer, you will need some insane hardware to run it on your computer at a reasonable speed. You would probably need around 200 GB of RAM just to load the model. As Llama is currently the best open source LLM, people will tweak it to run with less RAM, but it still will not run on an average computer.
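Where does a figure like 200 GB come from? A rough rule of thumb is that the weights alone take parameters × bits per weight / 8 bytes. The sketch below is a back-of-the-envelope estimate, not an exact requirement: it assumes 4-bit quantization gets the 400B model down to about 200 GB, and it ignores the extra memory for the context (KV cache) and runtime overhead, so real numbers are somewhat higher. The function name `weight_memory_gb` is just an illustrative helper.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights only, in gigabytes (1 GB = 1e9 bytes).

    Ignores KV cache, activations, and runtime overhead, so it is a lower bound.
    """
    return num_params * bits_per_weight / 8 / 1e9


# Compare full 16-bit weights with 4-bit quantized weights for each model size.
for name, params in [("Llama 3 8B", 8e9), ("Llama 3 70B", 70e9), ("Llama 3 400B", 400e9)]:
    for bits in (16, 4):
        print(f"{name}: {bits}-bit ~ {weight_memory_gb(params, bits):.0f} GB")
```

At 16-bit precision the 400B model would need about 800 GB, so the 200 GB figure already assumes aggressive quantization. The same arithmetic suggests why the 70B model is so slow on an 8 GB graphics card: even at 2 to 4 bits per weight it needs roughly 17.5 to 35 GB, so most of it has to run from system RAM instead of video memory.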

Are Llama 3 8B and 70B models any good?

My experiments with Llama 3 8B gave me “meh” results. It’s really fast when running on Groq, but stupid. There’s no reason to use this model over any of the better free models.

There may be specific cases for using Llama 3 8B, for example, when you need to run a model without an internet connection and don’t have powerful hardware. Use some meaningful real-life prompts to experiment with the Meta Llama 3 models and get a feel for their usefulness.

How good is the Llama 3 70B model?

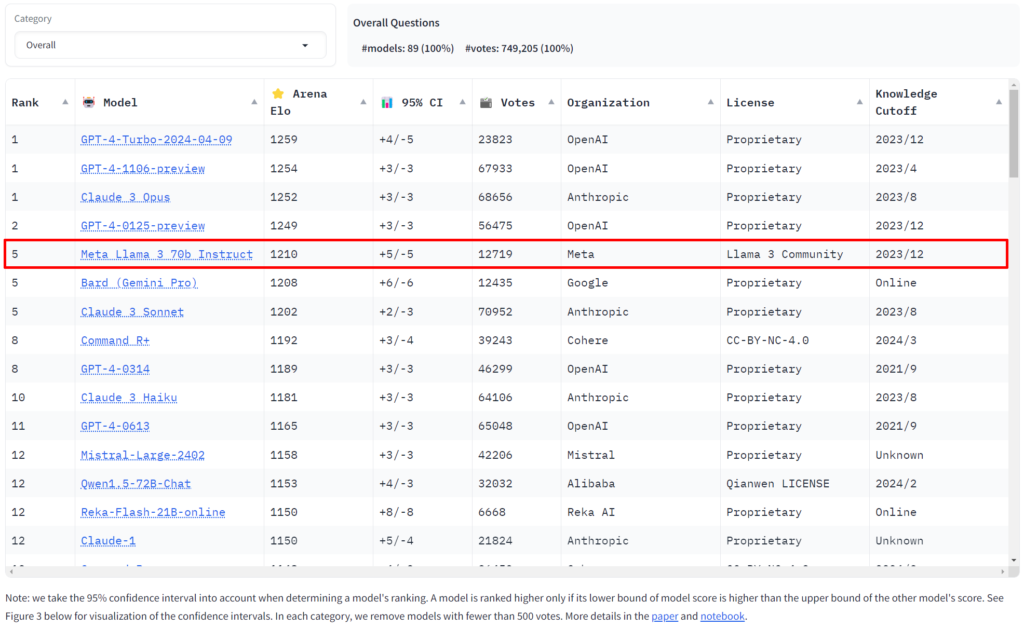

Llama 3 70B is much better than its smaller 8B sibling. However, it still falls short of the main competitors, GPT-4 and Claude 3 Opus. Gemini 1.5 Pro also ranks two places above Llama 3 70B.

Early tests show that the comparable free LLMs, Claude 3 Sonnet and Command R+, are a bit behind Meta’s new model.

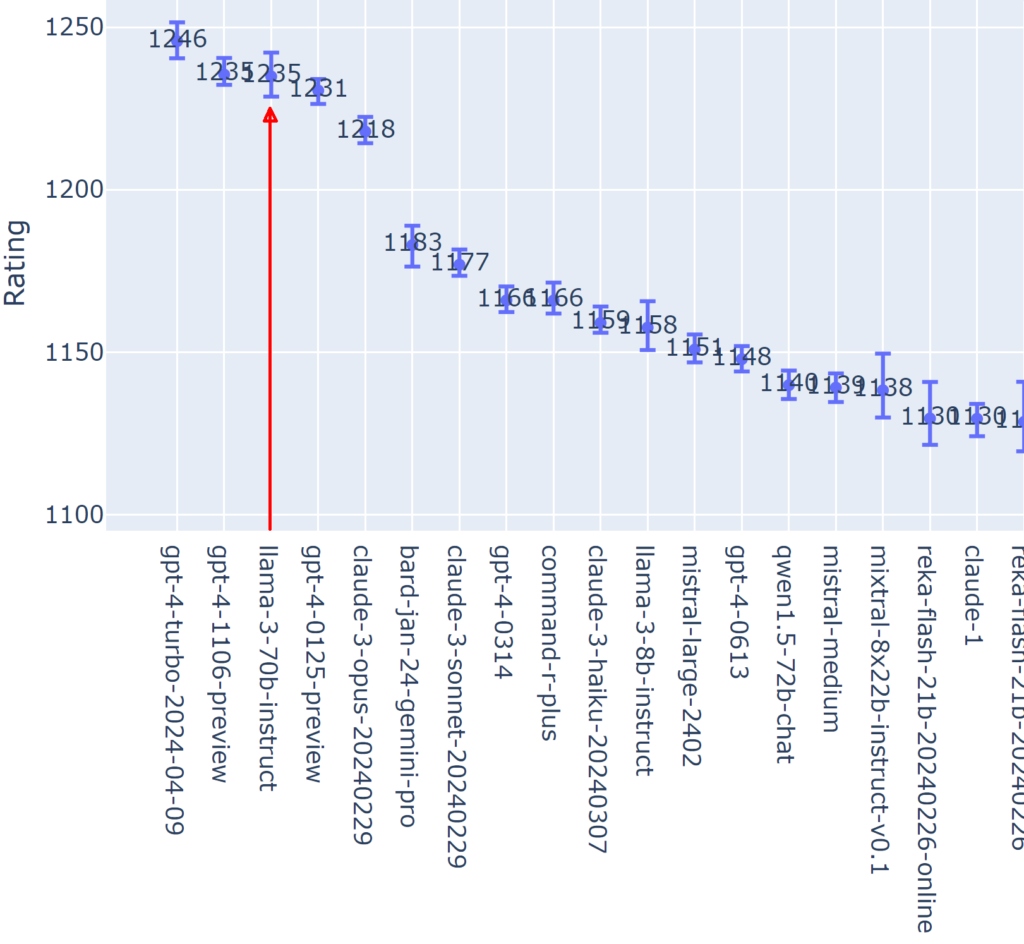

Leaderboard: https://chat.lmsys.org/

So, as exciting as it is to run an LLM on your own computer, when you need to get work done, there are better paid options available.

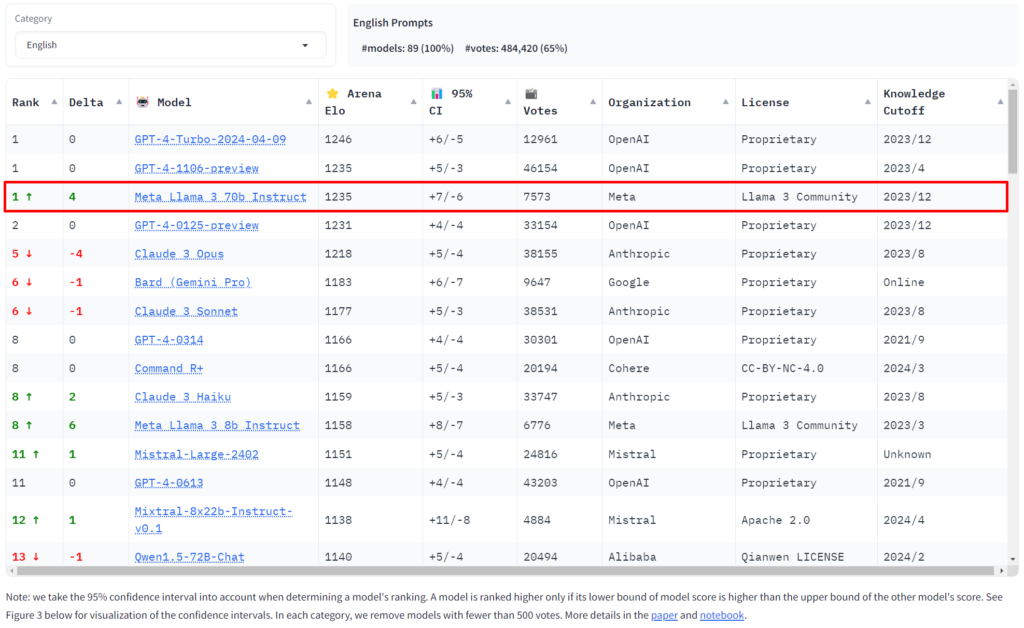

But there’s a but. Llama 3 is not yet built to be multilingual. When you consider only English-language tests, Llama 3 70B almost matches the current best GPT-4 models from OpenAI, and it beats Claude 3 Opus.

This is based on the LLM leaderboard counting only English prompts.

Keep in mind that the model has been out for a very short time, and the error bars on the data are extremely wide. Over the next couple of weeks, as more people submit their tests, Llama 3 70B will settle into its rightful position among its competitors.
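Why do the error bars shrink as more votes come in? As a simplified illustration (not the leaderboard’s actual method, which fits ratings from pairwise votes and bootstraps its confidence intervals), treat a single head-to-head matchup as a win rate and compute a 95% normal-approximation interval for it. The half-width of that interval shrinks roughly with 1/√n, so quadrupling the number of votes halves the error bar. The 55% win rate and the vote counts below are made up purely for illustration.

```python
import math


def win_rate_ci(wins: int, games: int, z: float = 1.96) -> tuple[float, float]:
    """95% normal-approximation (Wald) confidence interval for a win rate, clipped to [0, 1]."""
    p = wins / games
    half_width = z * math.sqrt(p * (1 - p) / games)
    return max(0.0, p - half_width), min(1.0, p + half_width)


# Hypothetical vote counts: the same 55% win rate observed with more and more votes.
for games in (100, 400, 1600, 6400):
    low, high = win_rate_ci(round(0.55 * games), games)
    print(f"{games:>5} votes: win rate 0.55, 95% CI [{low:.3f}, {high:.3f}]")
```

Each fourfold increase in votes halves the interval width, which is why a brand-new model’s position moves around for a while before it settles.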

Of course, Anthropic and OpenAI will now try to improve their models. But when Llama 3 70B becomes multilingual, it will probably also rise in the overall rankings. Competition is great, and this year we will most likely see some big advances in LLM capability.

The hardware needed to run Llama 3 70B reasonably fast is beyond what most people have at home. You need a ton of RAM and probably several really good GPUs.

Is Meta Llama 3 an open source LLM? No!

Let’s say you don’t want to work; you want to tinker. Then Llama 3 with 70 billion parameters is your best bet. It is an “open source LLM.” Well, not really! You can take a look at the license here: https://llama.meta.com/llama3/license/. For example:

v. You will not use the Llama Materials or any output or results of the Llama Materials to improve any other large language model (excluding Meta Llama 3 or derivative works thereof).

But you do have a license to tweak and improve it, so Meta can use it in their commercial products later.

Meta’s Llama 2 release includes only “model weights and starting code” according to their Github page. One could argue that a fully open LLM would include much more than this: it’s not just about code anymore, but how you derived that huge pile of magic numbers that a LLM actually consists of. – opensourceconnections.com

Also, the weights are not publicly provided by Meta. You must apply to get a copy of the weights.

So, you are free to play around with this model. I completely understand that Zuck is not spending tens of billions out of the goodness of his heart to share with the common man. It’s business, and they are more generous than the other big players. However, it is also part of their PR and development strategy.

Future of Meta Llama 3 400B

Meta is currently training an even more advanced version of Llama with over 400 billion parameters. For comparison, GPT-4 has an estimated 1.76 trillion parameters.

Now, it’s clear that every LLM player out there wants to beat GPT-4. Up until now, no one has been able to do it convincingly. All the top models are in the same ballpark, with overlapping error bars. Some people prefer Claude 3 Opus; others use GPT-4.

In their Llama 3 release blog post, Meta reveals that its still-in-training Llama 3 with 400 billion parameters is matching GPT-4. As the blog post notes:

Please note that this data is based on an early checkpoint of Llama 3 that is still training.

It would be nonsensical for Meta to release Llama 3 400B without topping the chart. “Hey, look, we made an AI that came in second” is not a good message, even if they plaster the “open source LLM” label on it. Most people will never run their own LLMs. Meta, along with Google, is a direct way of investing in AI stocks.

I hope this helps you understand how Meta changed the LLM landscape. It’s great if you want to build on top of Meta Llama 3, but nothing special if you just use LLMs for everyday productivity.