You can now get access to Google’s Gemini 1.5 Pro language model with an insane 2 million token context window.

Check out how to share your screen with Google AI Studio and Gemini 2.0 Flash

Update December 22, 2024: Google just dropped a new version of its AI model, Google Gemini 1.5 Pro Experimental 1206, and it’s making waves! Here’s what you need to know:

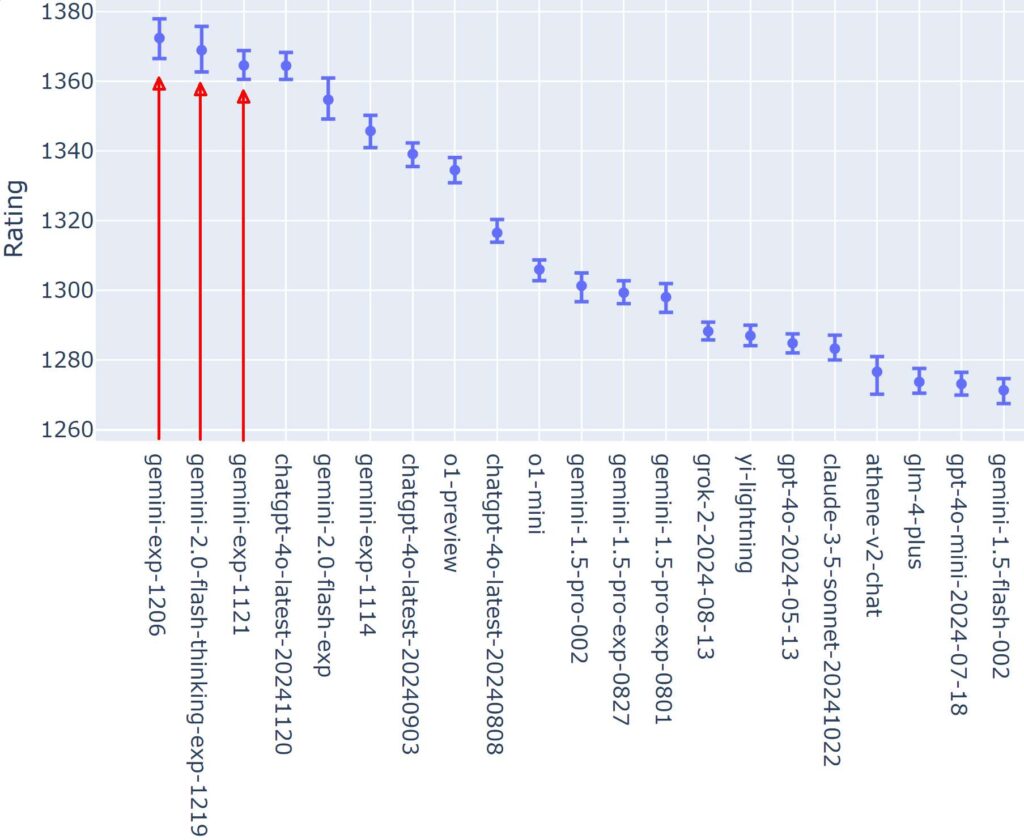

🥇 It beat out the top AI models: Google’s Gemini 1.5 Pro scored 1301 on the LMSYS Chatbot Arena leaderboard. This puts it in direct competition with OpenAI’s GPT-4o and Anthropic’s Claude 3.5 Sonnet. For some time it held the top spot in this specific AI benchmark.

💾 It can handle huge amounts of data: Gemini 1.5 can process up to 2 million tokens. This means it can work with big documents, code, and even audio/video! This is a far larger context window than competing models.

📽 It’s great at many tasks: Gemini 1.5 Pro is top-ranked on the LMSYS Vision Leaderboard. It excels in multilingual tasks and shows strong performance in technical areas such as math and coding. For example, it can analyze your videos.

💯 Early users are excited: Some Reddit users are saying it’s “insanely good” and better than GPT-4o. One Redditor even said it “blows 4o out of the water.”

However, this is a new release. Things might change as more data accumulates from tests.

What does a 2 million token context window mean? Well, you could feed the AI hundreds of thousands of words of your own data as context in a single prompt, with no training involved. There are tons of use cases.

If 1 million tokens is a lot, how about 2 million?

Today we’re expanding the context window for Gemini 1.5 Pro to 2 million tokens and making it available for developers in private preview. It’s the next step towards the ultimate goal of infinite context. #GoogleIO pic.twitter.com/3OW77YH4Ec

— Google (@Google) May 14, 2024

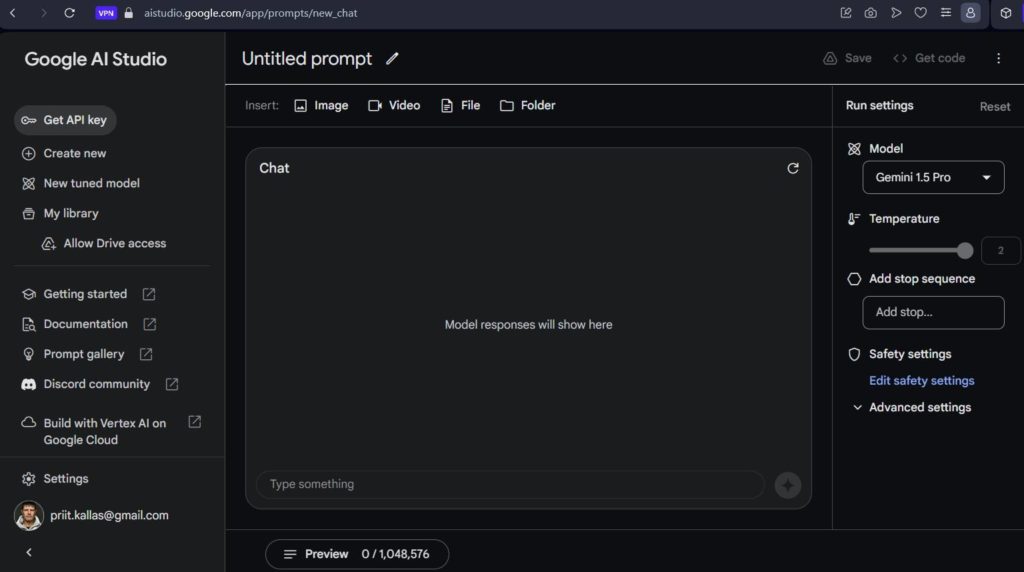

First, how to get access to Gemini 1.5?

You can get access here: https://aistudio.google.com/app/prompts/new_chat

Optional steps:

Connect your Google Drive to save conversations.

First experiences with Gemini 1.5

The first thing I tested was Gemini’s context window size. I have a transcript of my 11-hour AI course that is 160 thousand words and about 220 thousand tokens. That is more than Claude 3.5 or ChatGPT 4o can handle.

I fed it to Gemini 1.5 and asked it to make a schedule based on the transcript. It correctly detected that it’s meant to be a 3-day seminar and broke it up into days and subsections. I also asked the AI to use the style it detected from the transcript.

The results were great: a spot-on schedule covering the content of the course and pointing out the value to the person taking the course. Then I asked it to write LinkedIn posts based on the transcript and schedule, one covering the whole course and three more, one for each day. Because it knew my style, the resulting posts were not over-the-top marketing hype but really useful posts I could virtually copy and paste without editing.
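If you want a quick feel for how many tokens your own text will use, the numbers above give a rough words-to-tokens ratio. This is only a back-of-the-envelope sketch: the ratio comes from this one transcript, `estimate_tokens` is a helper name made up for illustration, and the real count depends on the tokenizer and the language of the text.

```python
# Figures reported above for the course transcript.
WORDS = 160_000
TOKENS = 220_000

# Observed ratio for this one document: 1.375 tokens per word.
tokens_per_word = TOKENS / WORDS


def estimate_tokens(word_count: int, ratio: float = tokens_per_word) -> int:
    """Rough token estimate; the true count depends on the tokenizer."""
    return round(word_count * ratio)


print(tokens_per_word)
print(estimate_tokens(50_000))
```

For an exact number, use the token counter that AI Studio shows for your prompt rather than an estimate like this.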

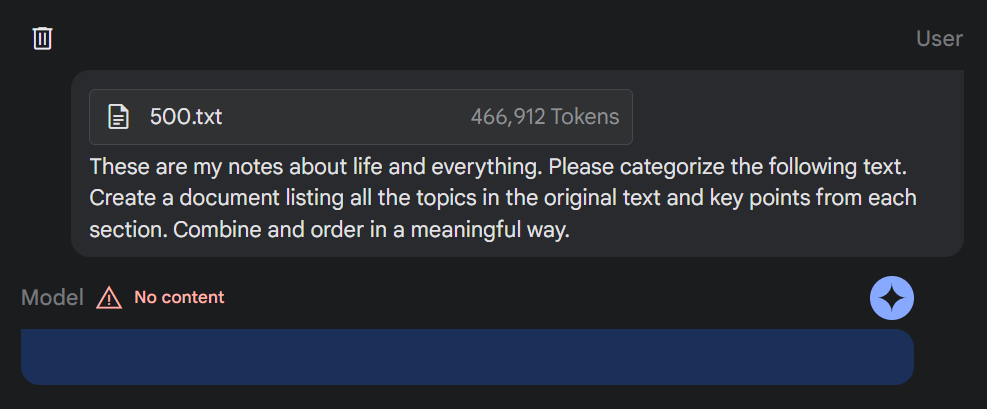

But then it failed. I have a large block of notes I have made over the last 7 years. I tried to feed it to Gemini 1.5 in the prompt. The text is 1.6 million characters, or 466 thousand tokens. I got a “No content” error from Gemini 1.5. So, I saved everything as a plain .txt file, uploaded it to Drive to avoid any connectivity issues, and tried again. Again, a “No content” error.

This means that for me, the Gemini 1.5 input token limit is somewhere between 350 and 466 thousand tokens. This massive context window could be valuable when having long conversations, for example, creating a marketing persona with AI.

Huge context windows are useful for generative AI in banking, finance analysis, and other corporate applications.

Check out what other AI tools I use daily.

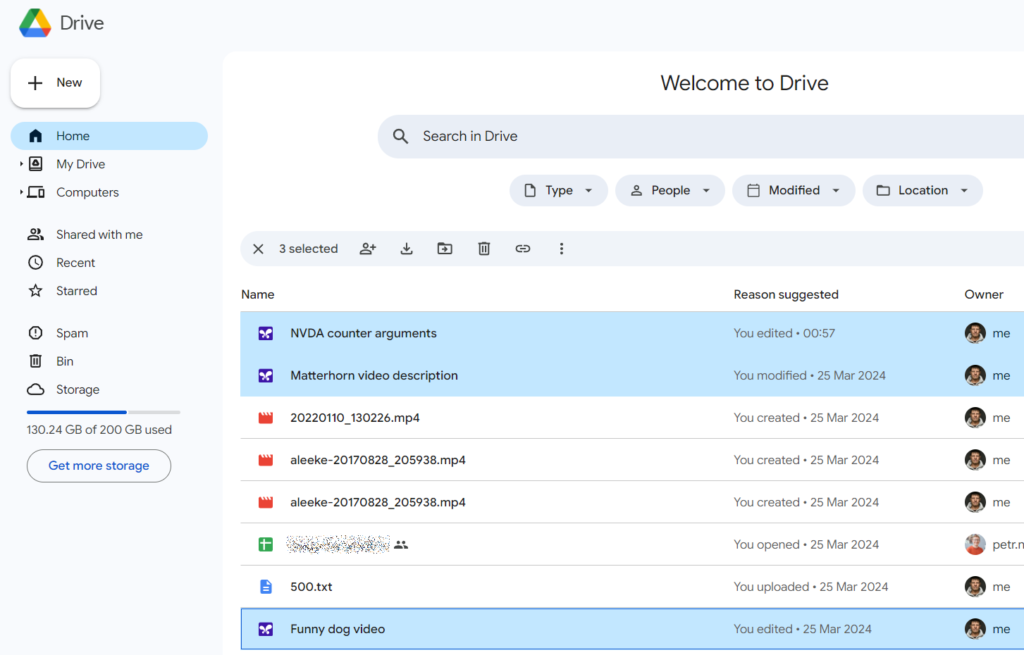

Save conversations to Drive

When you connect Gemini 1.5 to Google Drive, it saves all the conversations to Drive as files. You can also give it access to specific files that you want it to use as a knowledge base in your conversations.

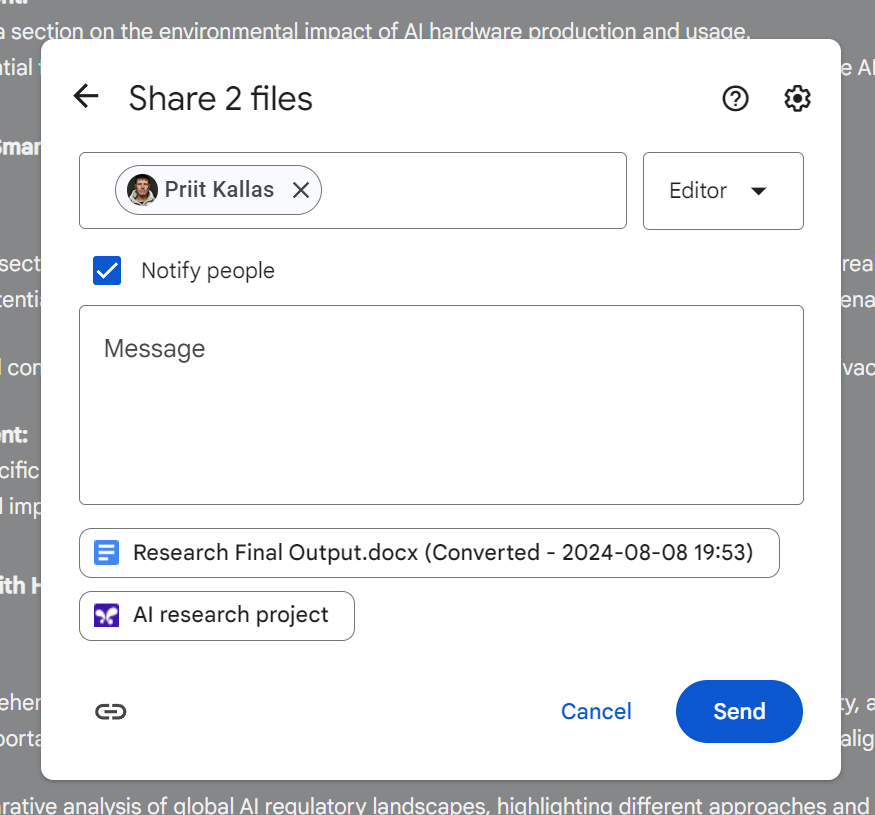

You can also share the conversations with other people. However, this is not a shared experience. You will each work on your own copy of the chat, and changes made after sharing will not be visible to the other person. That is still better than ChatGPT’s sharing, which only lets you share a static transcript of the conversation.

Load data from Drive to Google Gemini 1.5 Pro

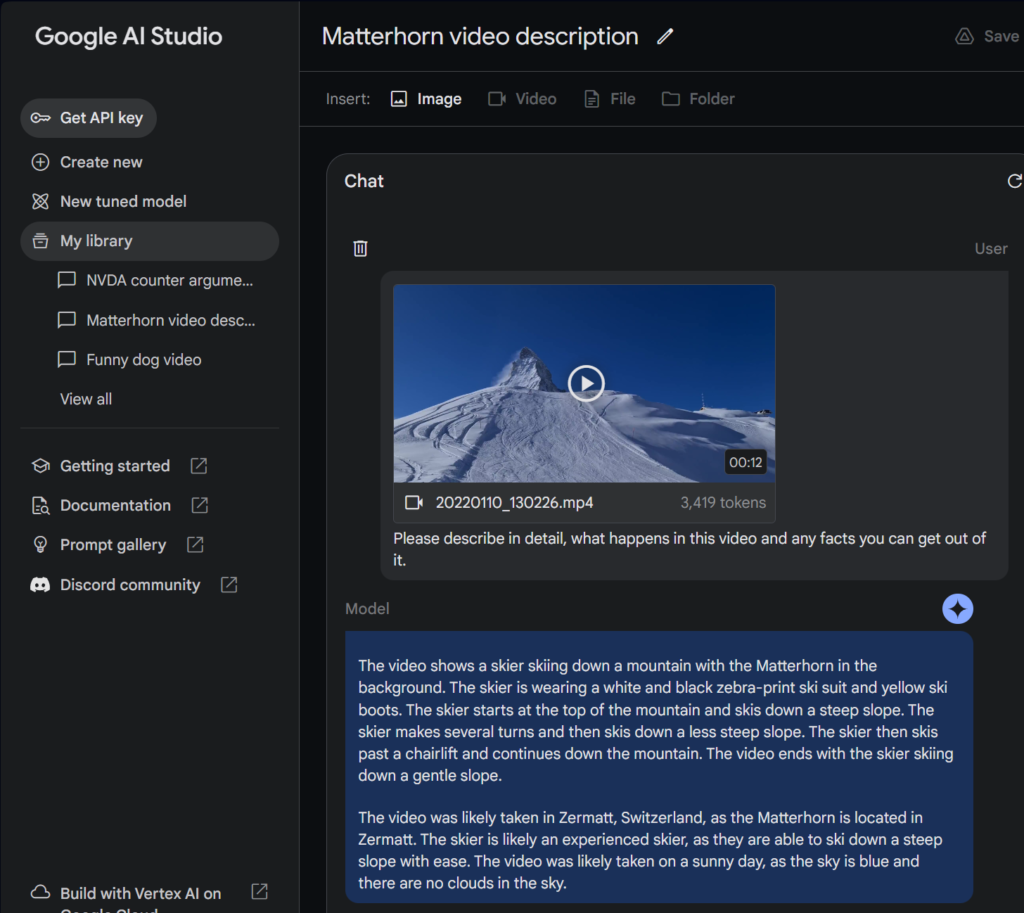

Because I had connected Gemini to Drive, I could load everything I needed straight from Drive, which avoided any bandwidth problems with uploading large pieces of data. I used it for text files and videos.

Google Gemini 1.5 Video prompts

Next, I wanted to experiment with Gemini’s video capabilities. I fed it a 12-second clip of my wife on a ski slope and asked it to describe the facts in the video. Gemini 1.5 Pro got almost everything right.

I also wanted to know if it understands the sequence of events happening in the video. I asked it to tell me when the skier is closest to the camera. I got the right answer on the first try.

Gemini Pro Vision

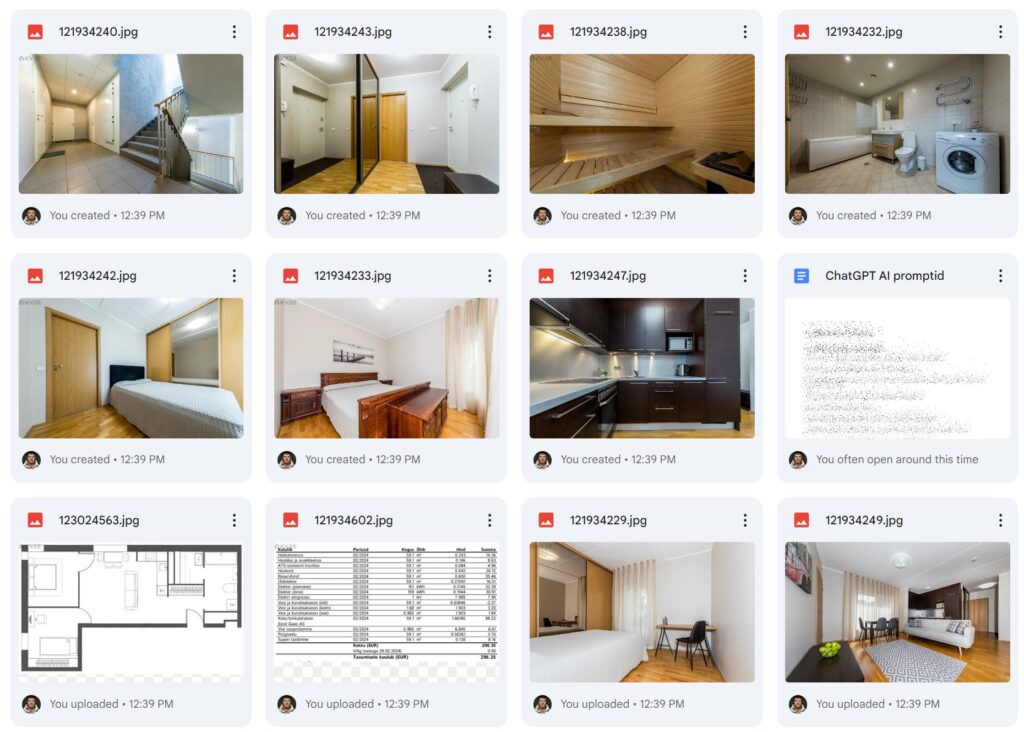

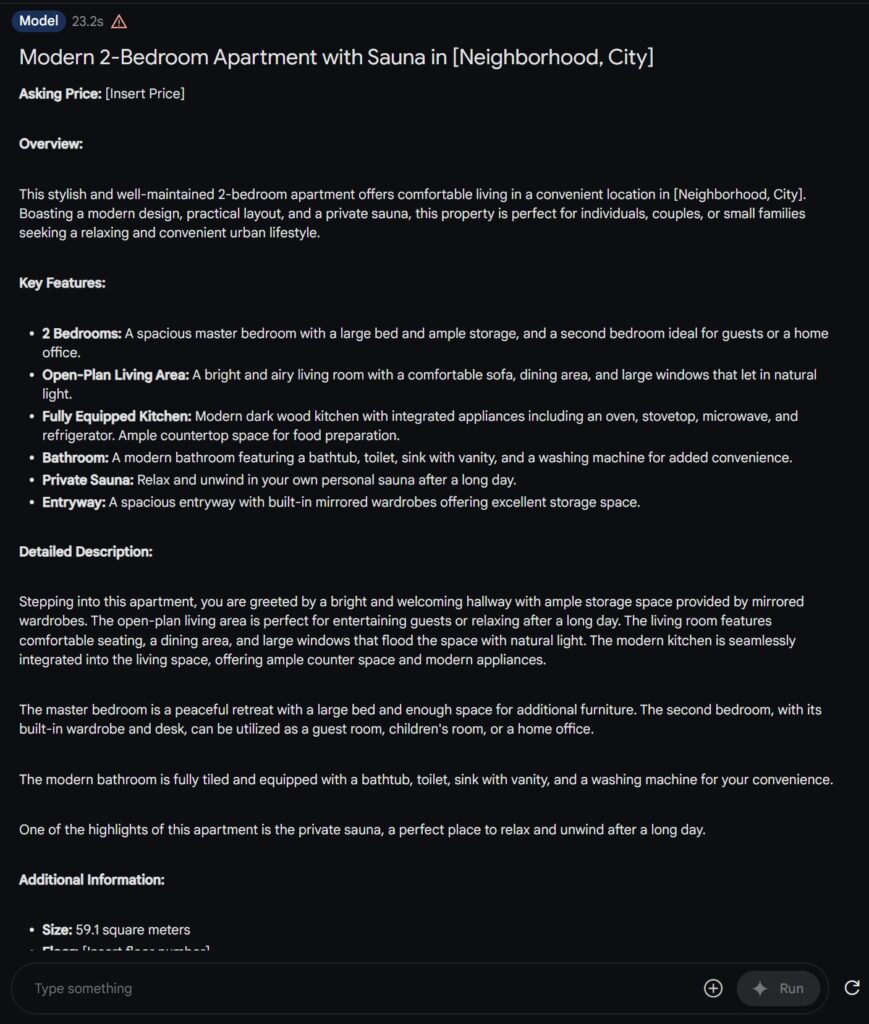

The new Gemini model is seriously impressive at understanding images! I fed it 9 photos of an apartment, the floor plan, and a utility bill.

Gemini 1.5 Pro generated a complete real estate listing that’s practically ready to publish. Not only that, I then asked it to translate the listing into Estonian, and it did so with ease, even incorporating the utility cost breakdown into the description. It analyzed all of that information together and produced usable output. The productivity gain for real estate agents is probably 10X in this case.

Other languages

Gemini 1.5 is pretty good with languages other than English. For all the experiments above I used my native Estonian and English. Estonian is a small language, so if it works in Estonian, it will work with languages that have tens of millions of speakers.

Gemini 1.5 API keys

You can create an API key in a new project if you don’t have one already, or add API keys to an existing project. All projects are subject to the Google Cloud Platform terms and conditions. Gemini 1.5 Pro is better than Meta’s Llama 3 70B model.
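Once you have a key, a request to the model is a plain HTTPS POST. Here is a minimal sketch using only Python's standard library. The model name `gemini-1.5-pro` and the environment variable `GEMINI_API_KEY` are my assumptions, not something taken from AI Studio, and model names change over time, so check the current model list before relying on it. The sketch only builds the request; the part that sends it is commented out.

```python
import json
import os
import urllib.request

# Assumptions: the model name and the environment variable name.
MODEL = "gemini-1.5-pro"
API_KEY = os.environ.get("GEMINI_API_KEY", "YOUR_API_KEY")
BASE_URL = "https://generativelanguage.googleapis.com/v1beta/models"


def build_request(prompt: str, api_key: str = API_KEY,
                  model: str = MODEL) -> urllib.request.Request:
    """Build (but do not send) a generateContent request for a text prompt."""
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        url=f"{BASE_URL}/{model}:generateContent",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-goog-api-key": api_key},
        method="POST",
    )


request = build_request("Summarize this transcript in three bullet points.")
print(request.full_url)

# To actually send it (needs a valid key and network access):
# with urllib.request.urlopen(request) as response:
#     reply = json.load(response)
#     print(reply["candidates"][0]["content"]["parts"][0]["text"])
```

Keeping the key in an environment variable rather than in the script means you can share the code without sharing the key.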

Google Gemini 1.5 Pro price

Google Gemini 1.5 Pro model preview with a 2 million token context window is currently available for free for testing.

Rate Limits

- 2 RPM (requests per minute)

- 32,000 TPM (tokens per minute)

- 50 RPD (requests per day)

Price (input / output)

- Free of charge

Context caching not applicable.
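To see what these free-tier limits mean in practice, here is a small sketch that works out how far apart requests have to be and how many days a batch would take. The `RateLimits` class and both helper functions are names I made up for illustration, and spreading tokens evenly across the minute is a simplification of how the quota is actually enforced.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class RateLimits:
    rpm: int  # requests per minute
    tpm: int  # tokens per minute
    rpd: int  # requests per day


# Free-tier numbers from the list above.
FREE_TIER = RateLimits(rpm=2, tpm=32_000, rpd=50)


def min_seconds_between_requests(limits: RateLimits, tokens_per_request: int) -> float:
    """Smallest gap between requests that respects both RPM and TPM."""
    by_requests = 60 / limits.rpm
    by_tokens = 60 * tokens_per_request / limits.tpm
    return max(by_requests, by_tokens)


def days_needed(limits: RateLimits, n_requests: int) -> int:
    """Number of days needed to send n_requests under the daily cap."""
    return math.ceil(n_requests / limits.rpd)


print(min_seconds_between_requests(FREE_TIER, 10_000))
print(days_needed(FREE_TIER, 120))
```

With 10,000-token prompts the request limit is the bottleneck, so you wait 30 seconds between calls, and 120 requests take three days because of the 50-per-day cap.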

However, the pricing of this model is pretty steep when you need larger volumes. Pay-as-you-go plan:

Rate Limits

- 360 RPM (requests per minute)

- 4 million TPM (tokens per minute)

- 10,000 RPD (requests per day)

Price (input)

- $3.50 / 1 million tokens (for prompts up to 128K tokens)

- $7.00 / 1 million tokens (for prompts longer than 128K)

Context caching

- $0.875 / 1 million tokens (for prompts up to 128K tokens)

- $1.75 / 1 million tokens (for prompts longer than 128K)

- $4.50 / 1 million tokens per hour (storage)

Price (output)

- $10.50 / 1 million tokens (for prompts up to 128K tokens)

- $21.00 / 1 million tokens (for prompts longer than 128K)
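To get a feel for what these prices add up to, here is a rough cost calculator for a single request. It is a sketch under a few assumptions: I treat "128K" as exactly 128,000 tokens, the output token counts in the examples are invented, and context caching is ignored. `estimate_cost` is a hypothetical helper, not part of any Google library.

```python
# Pay-as-you-go prices from the list above, in USD per 1 million tokens.
THRESHOLD = 128_000  # assumption: "128K" means exactly 128,000 tokens
INPUT_PRICE = {"short": 3.50, "long": 7.00}
OUTPUT_PRICE = {"short": 10.50, "long": 21.00}


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost of one request, ignoring context caching."""
    tier = "long" if input_tokens > THRESHOLD else "short"
    total = input_tokens * INPUT_PRICE[tier] + output_tokens * OUTPUT_PRICE[tier]
    return total / 1_000_000


# The 220 thousand token transcript with a made-up 2,000-token answer.
print(round(estimate_cost(220_000, 2_000), 4))

# A shorter prompt that stays under the 128K threshold.
print(round(estimate_cost(100_000, 1_000), 4))
```

Note that the higher output price applies whenever the prompt is long, so a big transcript makes every answer about it more expensive too.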

Different prompt options

Freeform prompts – These prompts offer an open-ended prompting experience for generating content and responses to instructions. You can use both images and text data for your prompts.

Structured prompts – This prompting technique lets you guide model output by providing a set of example requests and replies. Use this approach when you need more control over the structure of model output.

Chat prompts – Use chat prompts to build conversational experiences. This prompting technique allows for multiple input and response turns to generate output.
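To make the difference between these options more concrete, here is a sketch of how a structured prompt and a chat prompt could be put together in code. The `build_structured_prompt` helper, the "Input"/"Output" labels, and the example texts are all my own illustration. AI Studio builds these for you in its interface, and this is not its internal format. The chat history uses the `user` and `model` roles of the Gemini API.

```python
def build_structured_prompt(examples, new_input,
                            in_label="Input", out_label="Output"):
    """Turn example request/reply pairs plus a new request into one prompt."""
    lines = []
    for request, reply in examples:
        lines.append(f"{in_label}: {request}")
        lines.append(f"{out_label}: {reply}")
    lines.append(f"{in_label}: {new_input}")
    lines.append(f"{out_label}:")  # left open for the model to complete
    return "\n".join(lines)


# Structured prompt: two worked examples guide the format of the answer.
examples = [
    ("3-room apartment, 70 m2, balcony", "Bright three-room home with a balcony."),
    ("Studio, 25 m2, city center", "Compact studio in the heart of the city."),
]
prompt = build_structured_prompt(examples, "2-room apartment, 48 m2, garden")
print(prompt)

# Chat prompt: alternating turns, each with a role and text.
chat_history = [
    {"role": "user", "parts": [{"text": "Here is my course transcript."}]},
    {"role": "model", "parts": [{"text": "Got it. What should I do with it?"}]},
    {"role": "user", "parts": [{"text": "Draft a 3-day schedule."}]},
]
```

A freeform prompt is simply the new request on its own, without the examples or the earlier turns.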

Is OpenAI dropping the ball?

Gemini 1.5’s answers are on par with the paid version of ChatGPT. I haven’t tested it extensively, but everything I got out of it in the first couple of hours was OK. When it comes to the context window, though, there’s no competition: ChatGPT just doesn’t have it. Video is also something that ChatGPT can’t work with.

Up till now Google’s PR has been “We are almost as good as ChatGPT!!!” Now they seem to be catching up. Maybe GOOG stock is becoming the best AI investment. And this is only Gemini 1.5 Pro; the Ultra version should be even better. In a month or so OpenAI will probably release GPT-4.5 or 5, but right now Claude 3.5 Sonnet and Gemini are getting more attention, even if not market share.

A select few can also check out our podcast about Google AI Studio, which is in Estonian.