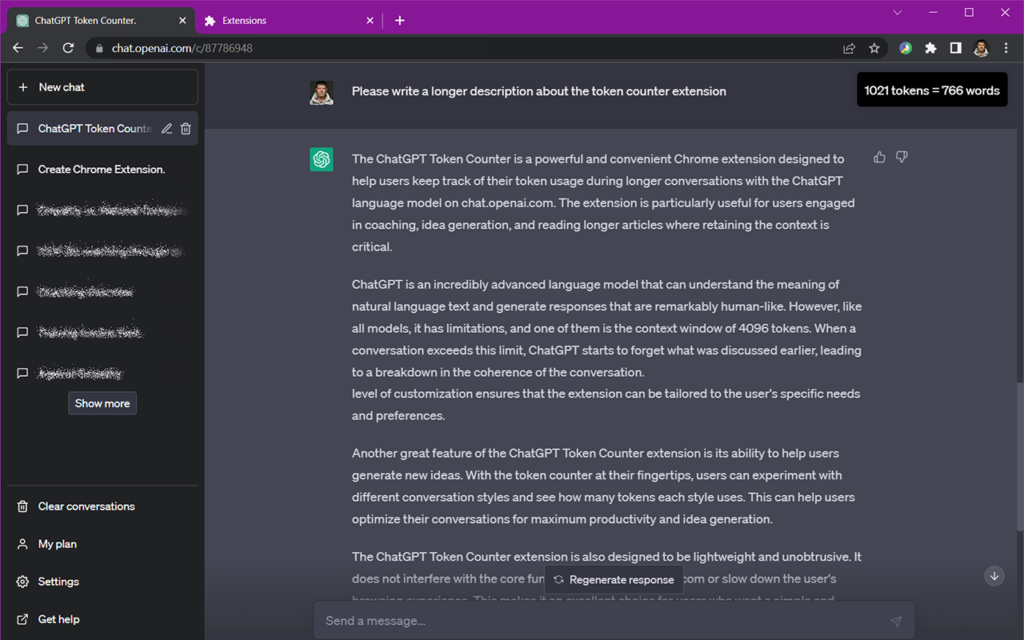

It is important to know what ChatGPT remembers. ChatGPT Token Counter gives you the answer.

I often have long conversations with ChatGPT. This can happen when you are trying to jailbreak it or when you want to know the meaning of life. For me, the long conversations are most often on the following topics:

- Programming and debugging

- Writing long-form content

- Mastermind with ChatGPT

- ChatGPT business coach

- Learning new things

ChatGPT has a context window of 4096 tokens. When the conversation exceeds this limit, the model starts to “forget” what was discussed earlier. To avoid talking about things ChatGPT no longer remembers, you need an AI token counter.
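To make the “forgetting” concrete, here is a minimal Python sketch of a conversation that no longer fits in the window. It rests on two assumptions that are mine, not documented ChatGPT behavior: the oldest messages are the first to fall out, and a token is roughly 4 characters of English text. The names `estimate_tokens` and `messages_in_context` are made up for illustration.

```python
from typing import List

CONTEXT_WINDOW = 4096  # token limit quoted in this article


def estimate_tokens(text: str) -> int:
    """Rough estimate: about 4 characters per token (a heuristic, not a real tokenizer)."""
    return max(1, round(len(text) / 4))


def messages_in_context(messages: List[str], limit: int = CONTEXT_WINDOW) -> List[str]:
    """Return the most recent messages whose combined estimate fits within the limit."""
    kept: List[str] = []
    used = 0
    # Walk backwards from the newest message and stop once the budget is spent.
    for message in reversed(messages):
        cost = estimate_tokens(message)
        if used + cost > limit:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept
```

With three messages estimated at 1000, 2000, and 2000 tokens, only the last two fit in 4096 tokens, so under these assumptions the first message is the one that gets “forgotten”.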

I created an extension that provides a convenient box in the corner of the ChatGPT conversation window and displays the number of tokens or words used in the <main> element of the conversation.
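The extension itself runs in the browser, and its code is not shown here. As a rough offline illustration of the same idea, the Python sketch below pulls the text out of a page’s `<main>` element and reports a word count plus an estimated token count. The class and function names are hypothetical, and the token figure uses the approximate 4-characters-per-token rule rather than a real tokenizer.

```python
from html.parser import HTMLParser


class MainTextExtractor(HTMLParser):
    """Collect the text that appears inside <main> ... </main>."""

    def __init__(self) -> None:
        super().__init__()
        self.depth = 0    # how many <main> elements we are currently inside
        self.chunks = []  # text fragments found inside <main>

    def handle_starttag(self, tag, attrs):
        if tag == "main":
            self.depth += 1

    def handle_endtag(self, tag):
        if tag == "main" and self.depth > 0:
            self.depth -= 1

    def handle_data(self, data):
        if self.depth > 0:
            self.chunks.append(data)


def count_main(html: str) -> dict:
    """Return word and estimated token counts for the text inside <main>."""
    parser = MainTextExtractor()
    parser.feed(html)
    parser.close()
    # Collapse all runs of whitespace so the counts do not depend on markup layout.
    text = " ".join(" ".join(parser.chunks).split())
    return {"words": len(text.split()), "tokens": round(len(text) / 4)}
```

Text outside `<main>`, such as a navigation menu, is ignored, which is why the count reflects the conversation rather than the whole page.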

With this extension, you will know when context may be missing, so you can have more productive conversations with ChatGPT.

You can add the extension to your Google Chrome here. It also works with any other Chromium-based browser like Amazon Silk, Brave, Epic, Microsoft Edge, Opera, etc.

What is a ChatGPT token?

GPT models, like ChatGPT, process text as tokens, which are common sequences of characters found in text. These models are really good at figuring out the relationships between tokens, so they can predict which token should come next in a sequence!

Usually, one token is about the same as 4 characters in English text. That’s about 0.75 of a word. So, if you’ve got 100 tokens, that’s roughly 75 words.
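The rule of thumb above turns into simple arithmetic. This is a minimal Python sketch with made-up function names; it only approximates what a real tokenizer would report, and the ratios hold for typical English text.

```python
CHARS_PER_TOKEN = 4      # rule of thumb: one token is about 4 characters
WORDS_PER_TOKEN = 0.75   # rule of thumb: one token is about 0.75 of a word


def tokens_from_characters(text: str) -> int:
    """Estimate tokens from the character count."""
    return round(len(text) / CHARS_PER_TOKEN)


def tokens_from_words(text: str) -> int:
    """Estimate tokens from the whitespace-separated word count."""
    return round(len(text.split()) / WORDS_PER_TOKEN)


def words_from_tokens(tokens: int) -> int:
    """Estimate how many English words a token budget covers."""
    return round(tokens * WORDS_PER_TOKEN)


print(words_from_tokens(100))  # prints 75
```

The two estimates will usually disagree a little on real text, since average word length varies, so treat either number as a ballpark figure.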

Token counters start to lose meaning as context windows get so big that we don’t really care to count tokens anymore. Gemini 1.5, for example, has a massive 1 million token context window.

I made a thing!

No! Not really. ChatGPT made a thing.

This extension was written by ChatGPT, with me prompting it to fix the errors and giving directions when it didn’t get it right! I copied and pasted the code into files, ran some tests, and fed the results back into ChatGPT for improvement.

ChatGPT Token Counter is a simple extension, but I have never created an extension before.

It took me about 6 hours to create the extension, but I wasted about half of that time in the beginning as I went off in the wrong direction. When I realized that I couldn’t fix some weird behavior, I started again from scratch.

The main problem, in the beginning, was that I didn’t really think through what the functionality would be. I kept adding and changing features. This messed up my code, and we couldn’t fix it.

Think before you start doing something. After I rewrote the prompt the way I should have from the start, I finished the extension in a bit over 2 hours.

Logo for the ChatGPT Token Counter

I would have liked a nicer logo for the extension, but I decided to go with the first thing that came from the first prompt I pasted in MidJourney. The actual prompt for the icon was:

flat Icon for the GPT token counter extension for Chrome browser --q 2

The logo icon was created 100% by MidJourney, but I helped it along with Photoshop so that the logo would be on a transparent background.

Support for the extension

ChatGPT Token Counter is an extremely simple Chrome extension. Hopefully there’s nothing to support.

If you find something that could be improved on or flat out doesn’t work, send me an email to let me know.

I hope the extension helps you in your experiments with ChatGPT.

Also check out the ChatGPT No Enter Chrome Extension.

OpenAI token counter

If you need to be absolutely certain of your text’s token count, you can use OpenAI’s Tokenizer. However, OpenAI’s tool doesn’t work live in your ChatGPT window. For that, the ChatGPT Token Counter gives a pretty good approximation.