Language barriers in business slow work down and reduce productivity. AI translation technology will fix this within a couple of years by making it easy to talk in any language, which will make work more productive and more fulfilling.

Imagine landing in Tokyo and effortlessly understanding every word spoken to you, despite not knowing Japanese. Right now, the best way to get around Japan is to have a Japanese-speaking companion with you at all times. Here’s how economist Tyler Cowen uses AI translation with ChatGPT (starts at 17:20):

AI will change how we speak within a couple of years.

- AI will soon make language barriers vanish, allowing seamless communication.

- Earbuds and AI will translate languages in real time, so that everyone you meet seems to speak your language.

- This technology will not only translate but also adapt tone, vocabulary, and grammar to improve interactions.

- AI can add context to conversations, interpreting cultural and behavioral cues. The technology will extend beyond language, aiding in emotional analysis, accent adaptation, and even non-verbal communication interpretation.

This isn’t a far-off dream but an impending reality, thanks to AI technology such as large language models. Soon, language barriers will disappear, and in the coming years AI will change the way we interact with people from different cultures.

In The Hitchhiker’s Guide to the Galaxy, Douglas Adams poked fun at technology by inventing the Babel fish, a creature that lived in a person’s ear and translated what others said in real time. From the wearer’s perspective, everyone was speaking their language.

Real-time AI translation now!

Real-time translation is already almost possible. Look around and you will notice that most people already have something in their ears almost all the time.

Here is how AI translation is going to play out in a couple of years. Because people wear their earbuds almost constantly, everything they hear can be processed by the phone and the AI in their pocket.

You get off the plane at Tokyo’s Narita Airport, and the person in the customs booth speaks your native language perfectly. Of course, you answer in your native language, and they understand everything.

What’s happening is that your noise-canceling earbuds actively cancel their voice, while what they say is translated into your language and played back to you. The same thing happens on their side.

Wherever you go, everyone speaks your language without an accent, and the mess created by the building of the Tower of Babel is finally undone.
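To make the two-way setup concrete, here is a minimal Python sketch of one direction of the pipeline: transcribe what the speaker said, translate it, and synthesize audio in the listener’s language. The function `relay` and the stage signatures are hypothetical, not any real product’s API, and the toy stand-ins simply treat "audio" as UTF-8 text and "translation" as a dictionary lookup so the sketch runs on its own.

```python
from typing import Callable

# Hypothetical stage signatures: a real earbud app would plug actual
# speech-to-text, machine translation and text-to-speech services in here.
Transcribe = Callable[[bytes, str], str]      # (audio, language) -> text
Translate = Callable[[str, str, str], str]    # (text, source, target) -> text
Synthesize = Callable[[str, str], bytes]      # (text, language) -> audio


def relay(audio: bytes, speaker_lang: str, listener_lang: str,
          transcribe: Transcribe, translate: Translate,
          synthesize: Synthesize) -> bytes:
    """Turn one utterance into audio in the listener's language.

    Assumes the listener's noise cancellation suppresses the original
    voice, so the returned audio is the only thing they hear.
    """
    text = transcribe(audio, speaker_lang)
    translated = translate(text, speaker_lang, listener_lang)
    return synthesize(translated, listener_lang)


# Toy stand-ins so the sketch runs end to end. "Audio" is just UTF-8 text
# and "translation" is a dictionary lookup; none of this is a real service.
PHRASES = {
    ("ja", "en", "ようこそ"): "Welcome",
    ("en", "ja", "Thank you"): "ありがとう",
}


def toy_transcribe(audio: bytes, lang: str) -> str:
    return audio.decode("utf-8")


def toy_translate(text: str, source: str, target: str) -> str:
    return PHRASES[(source, target, text)]


def toy_synthesize(text: str, lang: str) -> bytes:
    return text.encode("utf-8")


# The officer speaks Japanese and I hear English...
heard_by_me = relay("ようこそ".encode("utf-8"), "ja", "en",
                    toy_transcribe, toy_translate, toy_synthesize)
# ...and I answer in English while the officer hears Japanese:
# the same pipeline, with the languages swapped.
heard_by_officer = relay("Thank you".encode("utf-8"), "en", "ja",
                         toy_transcribe, toy_translate, toy_synthesize)

print(heard_by_me.decode("utf-8"))
print(heard_by_officer.decode("utf-8"))
```

Passing the stages in as functions keeps the sketch honest about what it does not know: the hard parts (recognition, translation quality, natural-sounding speech) live entirely inside whatever services get plugged in.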

Real-time AI translation is 3 to 5 years out

UPDATE: This is hilarious. I wrote this around January 2024. And now we have this.

I still think it will take some time to integrate translation into our lives so seamlessly that we don’t even think about it. But the key parts are already here.

This is what I wrote in Dec 2023 / Jan 2024:

It will take a couple of years to become mainstream, but this will be commonplace by the end of this decade. When you travel, life will be like a science fiction movie where everyone is speaking the same language.

But that is not all. Once the technology goes mainstream, it can offer many more benefits than translation alone.

You can instruct the AI to adjust your tone, vocabulary, grammar, and other features of your verbal communication. For example, it can soften your tone when you get angry. AI translation will increase your productivity and eliminate countless obstacles that make building your business harder or sometimes impossible.

It can also perform basic functions such as playing back past conversations. There will no doubt be legal issues to tackle before that can happen, but it most likely will.

While the AI is translating the other language for you, it can also add context that is not in the words themselves but is implied by cultural and behavioral cues. You will get an annotated version of what the other person is saying, based on your predefined preferences.

What else can real-time AI language processing do?

But the technology isn’t limited to foreign languages. An older person may have a hard time talking with teenagers, and AI can help bridge that gap. It can also coach you through difficult and awkward situations, so you never have a “What do I say now?” moment again. If you are listening to a politician, it can give you broader commentary on the actual key facts of the issue. For marketing, AI can translate your message into the language of your ideal customer persona. And there’s more:

- Accent Adaptation: Automatically adjust accents in real-time to match the listener’s native dialect for easier comprehension.

- Voice Cloning for Personalized Audio Books: Clone voices of favorite actors or personalities to read audiobooks or other text, providing a personalized listening experience.

- Voice-Based Emotional Analysis: Analyze vocal patterns to assess a speaker’s emotional state and provide appropriate responses or support.

- Sound-Based Contextual Information: Provide contextual background sounds or music that align with the conversation’s content, enhancing the storytelling or communication experience.

- Non-Verbal Communication Interpretation: Translate non-verbal vocal cues like tone, pitch, and volume into descriptive text to aid understanding in written communications.

- Voice-Controlled Ambient Sound Adjustment: Automatically adjust ambient sounds in an environment (like reducing background noise) during conversations for clearer communication.

- Speech Disfluency Correction: Smooth out speech in real-time, removing disfluencies like “uhms” and “ahs” for clearer, more professional communication.

- Voice-Activated Sound Effects in Conversations: Introduce relevant sound effects during a conversation (like applause or laughter) based on the dialogue’s context, using voice commands.

- Real-Time Vocal Translation for Singing: Translate songs as they are being sung, maintaining the melody while changing the lyrics to the listener’s language.

- Adaptive Volume Control in Noisy Environments: Automatically adjust the volume of a conversation based on the ambient noise levels, ensuring clear communication even in loud settings.

These are just some of the uses of this technology. Maybe I’ll update this post in a couple of years when the features become available on my phone.

| Application | Description |
|---|---|
| Real-Time Translation | Translate languages in real time, making communication seamless across different languages. |
| Accent Adaptation | Automatically adjust accents to match the listener’s native dialect for easier comprehension. |
| Voice Cloning for Personalized Audio Books | Clone voices of favorite actors or personalities for a personalized listening experience. |
| Voice-Based Emotional Analysis | Analyze vocal patterns to assess a speaker’s emotional state and provide appropriate responses. |
| Sound-Based Contextual Information | Provide contextual background sounds or music that align with the conversation’s content. |
| Non-Verbal Communication Interpretation | Translate non-verbal vocal cues into descriptive text to aid understanding. Interpreting body language would also require vision. |
| Voice-Controlled Ambient Sound Adjustment | Automatically adjust ambient sounds during conversations for clearer communication. |

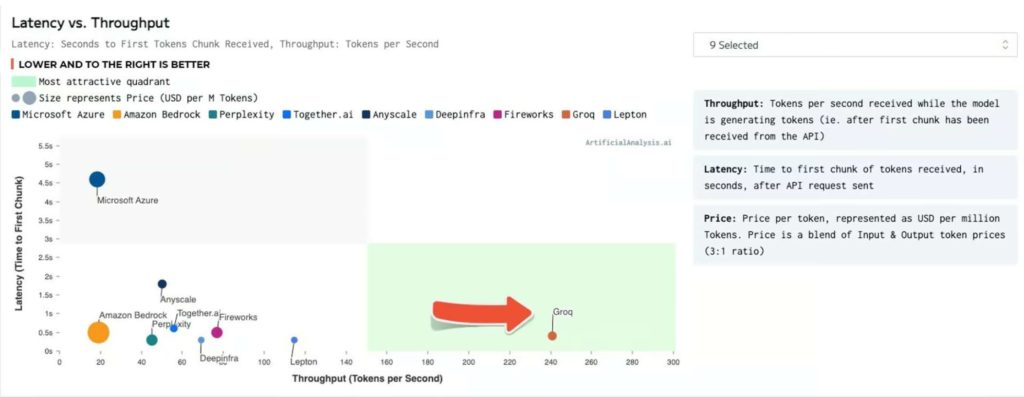

Current AI translation speed

When I started writing this, the main problem seemed to be speed. OpenAI and Google language models were slow. You say something, and the AI processes it at 10 to 20 words per second. Then latency can add a couple of seconds before the response starts to come out. Finally, the AI gives you the answer at about the same 10 to 20 words per second. Here’s an example of asking for directions:

I say this in about 4 to 6 seconds: “Please translate this to Japanese: Where is the best sushi place in the neighborhood?”

After a 2 to 3 second delay, the AI reads the translation aloud in about 4 to 6 seconds:

この近所で一番良い寿司屋はどこですか?

So, it takes about 6 to 10 seconds for the other side to hear my message in their language.

Then the same process happens in the opposite direction: “Please translate this to English: 一番おいしい寿司屋は、広い通りの「活気のある魚」にあります。300メートル先です。”

The most delicious sushi restaurant is “Lively Fish” on the wide street. It’s 300 meters ahead.

Let’s say there’s a 5-second delay. That would work for getting around and understanding people when there’s no other way, but the delays would make a casual conversation tedious and drawn out.
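Adding up the figures from this example shows where the waiting comes from. This is a back-of-the-envelope sketch: it uses only the delays quoted above, assumes the reply in the opposite direction takes about as long, and ignores the time each person spends speaking. The result, 6 to 9 seconds one way, matches the “about 6 to 10 seconds” estimate.

```python
# Per-direction delay using the figures quoted above:
# the model takes 2-3 s to respond, then the translation is read aloud in 4-6 s.
RESPONSE_DELAY_S = (2, 3)   # (best case, worst case), seconds
READ_ALOUD_S = (4, 6)       # (best case, worst case), seconds


def one_way_delay(response_delay, read_aloud):
    """Seconds from the end of my sentence until the listener has heard the translation."""
    return tuple(d + r for d, r in zip(response_delay, read_aloud))


one_way = one_way_delay(RESPONSE_DELAY_S, READ_ALOUD_S)
# Assumption: the answer in the opposite direction takes about as long.
round_trip = tuple(2 * t for t in one_way)

print(f"one way: {one_way[0]}-{one_way[1]} s")           # one way: 6-9 s
print(f"round trip: {round_trip[0]}-{round_trip[1]} s")  # round trip: 12-18 s
```

So a single question and answer carries roughly 12 to 18 seconds of machine overhead on top of the time both people spend talking, which is why casual conversation would feel stretched out.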

But just as I was writing this, Groq came out with its language processing unit (LPU) technology, which can push processing speed close to 500 words per second, with latency measured in milliseconds. At that speed, my conversation partner would hear the AI translation almost immediately after I finish my sentence.
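To see why the speedup matters, here is a rough comparison of how long the listener waits before the translation starts playing. It is a simplified model under stated assumptions: the whole translated sentence is generated before playback begins, speech recognition time is ignored, the 12-word sentence is hypothetical, 15 words per second stands in for the 10 to 20 range, 2 seconds stands in for “a couple of seconds” of latency, and 50 ms is an assumed value for “latency in milliseconds”.

```python
def wait_before_audio(words: int, words_per_second: float, latency_s: float) -> float:
    """Seconds the listener waits before the translation starts playing.

    Simplified model: fixed latency plus the time to generate the whole
    translated sentence. Assumes no streaming and ignores speech-to-text.
    """
    return latency_s + words / words_per_second


SENTENCE_WORDS = 12  # hypothetical length of a short question

# Earlier models: ~15 words/s with a couple of seconds of latency (assumed 2 s).
slow = wait_before_audio(SENTENCE_WORDS, words_per_second=15, latency_s=2.0)
# LPU-class speed: ~500 words/s with millisecond latency (assumed 50 ms).
fast = wait_before_audio(SENTENCE_WORDS, words_per_second=500, latency_s=0.05)

print(f"earlier models: {slow:.2f} s")    # earlier models: 2.80 s
print(f"~500 words/s:   {fast:.3f} s")    # ~500 words/s:   0.074 s
```

The exact numbers matter less than the order of magnitude: once generation takes a few hundredths of a second, the remaining delay comes mostly from the steps this sketch leaves out, such as recognizing the speech and playing the audio.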

Mind-to-mind communication

The AI translation technology I have laid out here is almost within reach. Noise cancellation and the other audio technology already exist; we only have to wait for AI to get a bit more intelligent.

But just a day before I published this, Elon Musk announced that his company Neuralink had successfully implanted a device directly connected to a human brain.

This technology will open up a completely different level of possibilities. Not just the next level, but a whole new game with infinite possibilities.

Would I let Neuralink implant a device into my brain? Not yet.

AI Translation: World Without Language Barriers

In the coming years, language barriers will disappear, enabling seamless global understanding through translation and the refinement of tone and grammar. AI will enhance verbal interactions and interpret cultural nuances and non-verbal cues, enriching conversations and making empathy and context integral to our exchanges. As rapid advances overcome the remaining technical limitations, this future of communication is not just a possibility but an imminent reality.